图床作为用Markdown以及静态博客用户来说,这事一直是一个困扰。试过七牛云、阿里、腾讯的OSS服务,光研究价格表就头大:存储费、请求费、CDN流量费层层叠加,生怕哪天被刷流量,下个月花呗直接爆炸。

Backblaze B2 是一个云存储解决方案,类似于 Amazon AWS S3。 Backblaze 的云存储每个注册用户拥有 10G 免费空间以及每天 1G 的下载流量,上传流量不限。同时 Cloudflare 与 Backblaze 之间的流量不计费,用作为图床是完全足够了,就算超出免费额度,$0.006 GB/Month 的价格也很合适。

整体思路就是Backblaze B2私密桶存储图片,Cloudflare Worker 通过密钥实现认证访问 Backblaze B2 私密桶,并缓存图片。

1.创建 Backblaze 账号并创建存储桶

创建Buckets

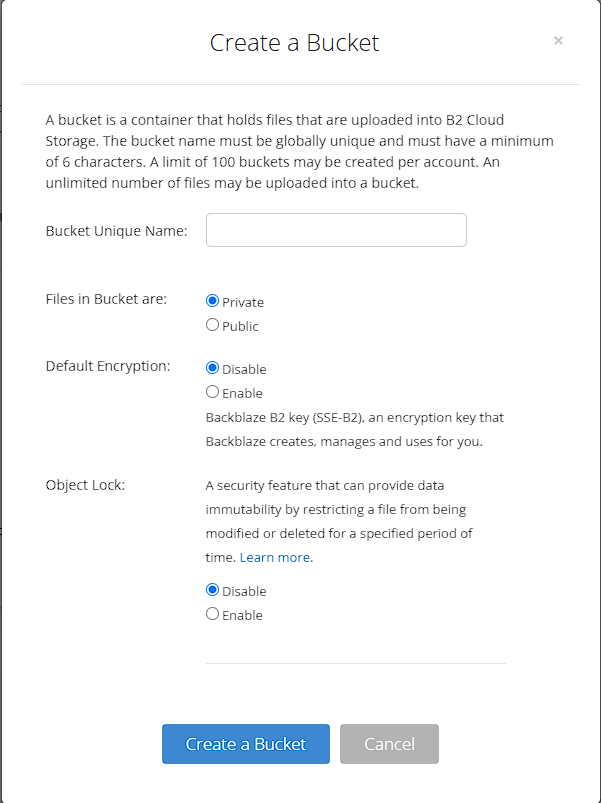

前往官网注册账号,点击左侧Buckets-Create a Bucket

填入桶名(建议使用UUID保证隐私安全),其余项保持默认即可,然后点击Create a Bucket

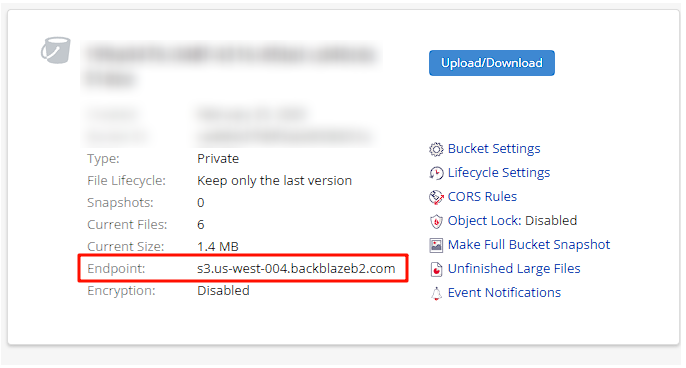

记录下Buckets的Endpoint,后边会用到。

配置Buckets

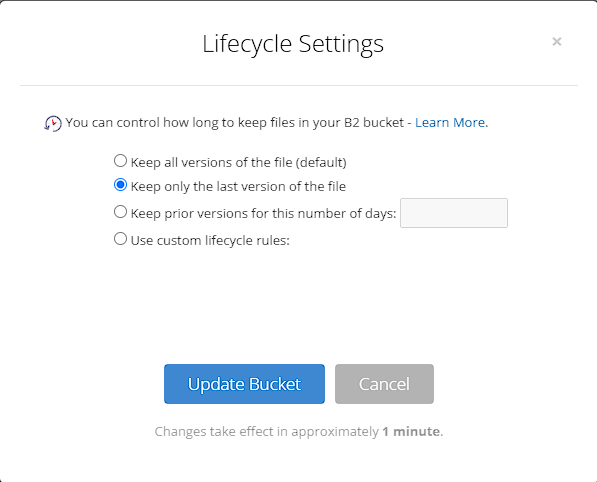

点击 Lifecycle Settings,选择 Keep only the last version of the file ,点击 Update Bucket 按钮。

点击 Buckets Settings, 在Bucket Info填入:

{"cache-control":"public, max-age=86400"}增加 Cloudflare 缓存命中率,86400表示缓存一天。

完成后点击Update Bucket。

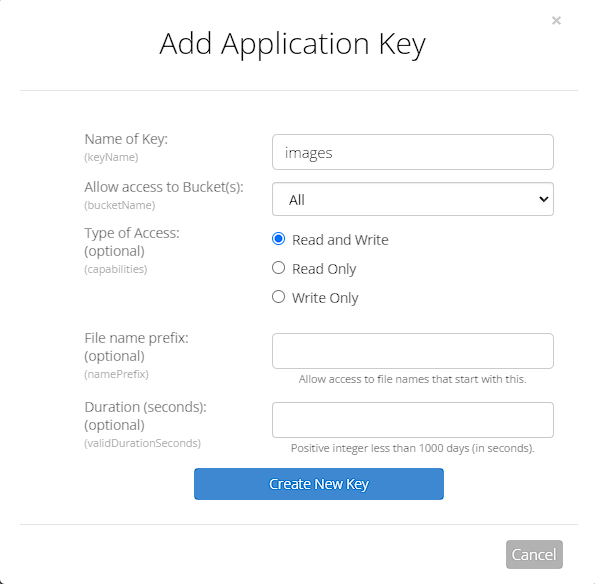

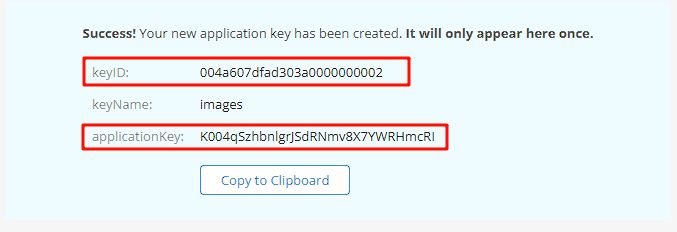

创建Application Keys

点击左侧菜单Application Keys,然后点击Add a New Application Key创建一个key,填入密钥名称,其余保持默认。

密钥创建好之后,请保存下来,**关掉就不再显示了!**后面会使用到。

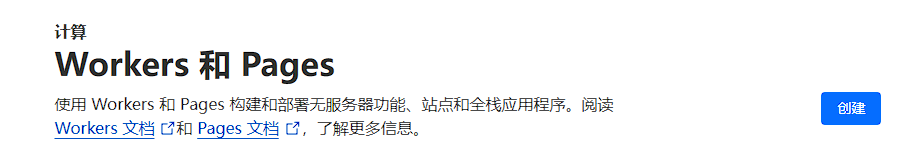

2.创建Cloudflare Worker

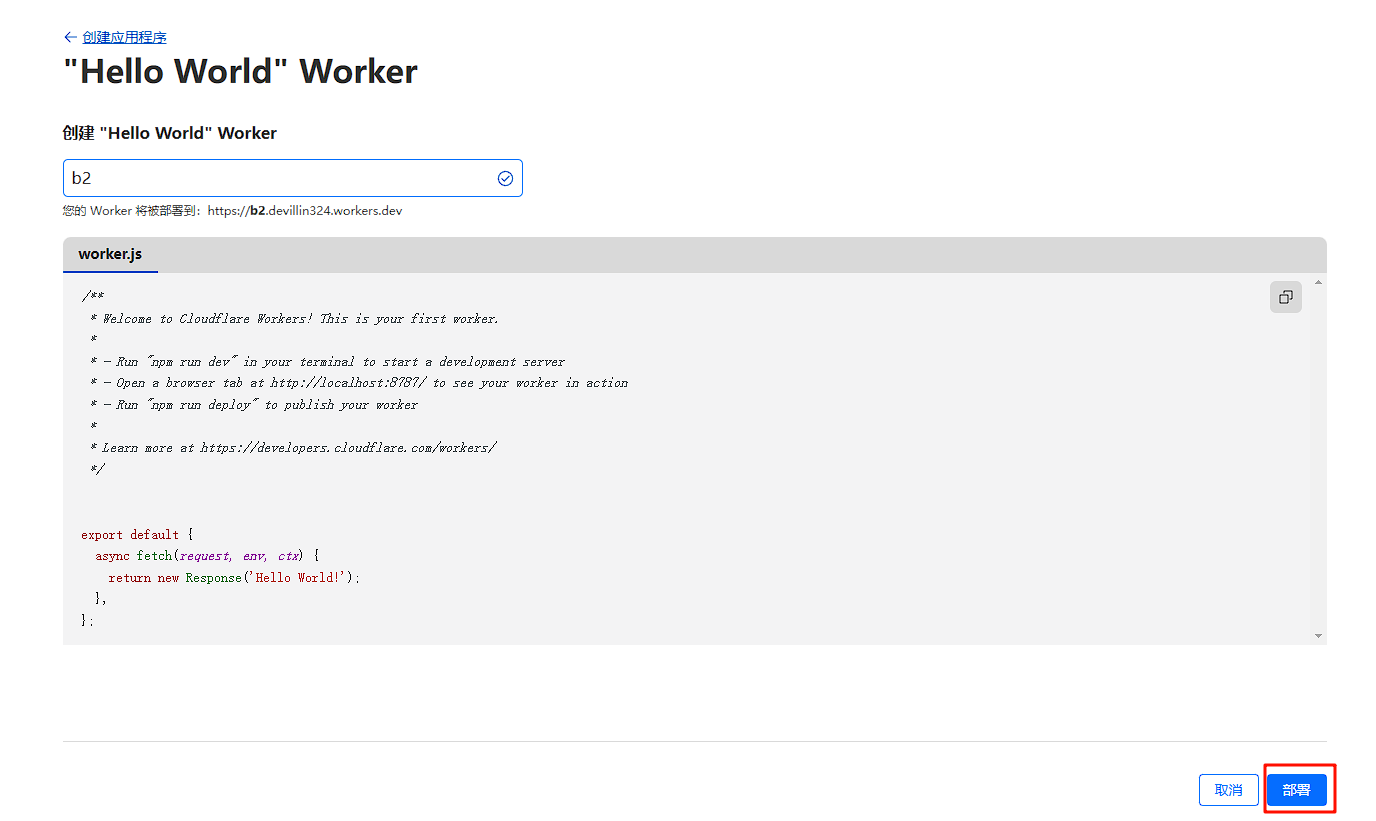

创建Worker

在Cloudflare左侧菜单中选择 Workers 和 Pages,点击创建-快速开始。

输入Worker名称,点击部署,一个简单的Worker就部署好了。

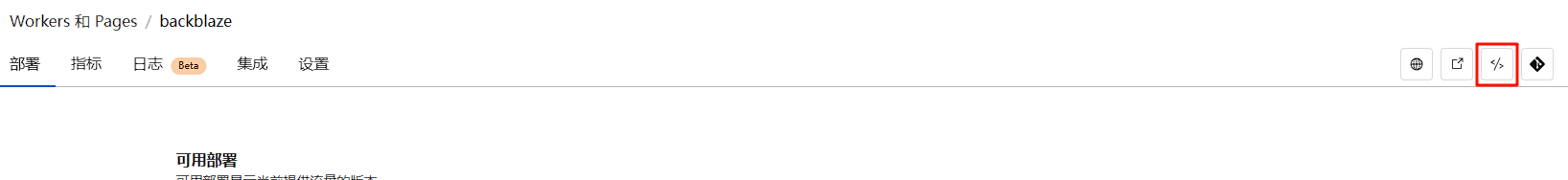

点击编辑代码,将以下代码替换默认的代码:

var encoder = new TextEncoder();var HOST_SERVICES = { appstream2: "appstream", cloudhsmv2: "cloudhsm", email: "ses", marketplace: "aws-marketplace", mobile: "AWSMobileHubService", pinpoint: "mobiletargeting", queue: "sqs", "git-codecommit": "codecommit", "mturk-requester-sandbox": "mturk-requester", "personalize-runtime": "personalize"};var UNSIGNABLE_HEADERS = /* @__PURE__ */ new Set([ "authorization", "content-type", "content-length", "user-agent", "presigned-expires", "expect", "x-amzn-trace-id", "range", "connection"]);var AwsClient = class { constructor({ accesskeyID, secretAccessKey, sessionToken, service, region, cache, retries, initRetryMs }) { if (accesskeyID == null) throw new TypeError("accesskeyID is a required option"); if (secretAccessKey == null) throw new TypeError("secretAccessKey is a required option"); this.accesskeyID = accesskeyID; this.secretAccessKey = secretAccessKey; this.sessionToken = sessionToken; this.service = service; this.region = region; this.cache = cache || /* @__PURE__ */ new Map(); this.retries = retries != null ? retries : 10; this.initRetryMs = initRetryMs || 50; } async sign(input, init) { if (input instanceof Request) { const { method, url, headers, body } = input; init = Object.assign({ method, url, headers }, init); if (init.body == null && headers.has("Content-Type")) { init.body = body != null && headers.has("X-Amz-Content-Sha256") ? body : await input.clone().arrayBuffer(); } input = url; } const signer = new AwsV4Signer(Object.assign({ url: input }, init, this, init && init.aws)); const signed = Object.assign({}, init, await signer.sign()); delete signed.aws; try { return new Request(signed.url.toString(), signed); } catch (e) { if (e instanceof TypeError) { return new Request(signed.url.toString(), Object.assign({ duplex: "half" }, signed)); } throw e; } } async fetch(input, init) { for (let i = 0; i <= this.retries; i++) { const fetched = fetch(await this.sign(input, init)); if (i === this.retries) { return fetched; } const res = await fetched; if (res.status < 500 && res.status !== 429) { return res; } await new Promise((resolve) => setTimeout(resolve, Math.random() * this.initRetryMs * Math.pow(2, i))); } throw new Error("An unknown error occurred, ensure retries is not negative"); }};var AwsV4Signer = class { constructor({ method, url, headers, body, accesskeyID, secretAccessKey, sessionToken, service, region, cache, datetime, signQuery, appendSessionToken, allHeaders, singleEncode }) { if (url == null) throw new TypeError("url is a required option"); if (accesskeyID == null) throw new TypeError("accesskeyID is a required option"); if (secretAccessKey == null) throw new TypeError("secretAccessKey is a required option"); this.method = method || (body ? "POST" : "GET"); this.url = new URL(url); this.headers = new Headers(headers || {}); this.body = body; this.accesskeyID = accesskeyID; this.secretAccessKey = secretAccessKey; this.sessionToken = sessionToken; let guessedService, guessedRegion; if (!service || !region) { [guessedService, guessedRegion] = guessServiceRegion(this.url, this.headers); } this.service = service || guessedService || ""; this.region = region || guessedRegion || "us-east-1"; this.cache = cache || /* @__PURE__ */ new Map(); this.datetime = datetime || (/* @__PURE__ */ new Date()).toISOString().replace(/[:-]|\.\d{3}/g, ""); this.signQuery = signQuery; this.appendSessionToken = appendSessionToken || this.service === "iotdevicegateway"; this.headers.delete("Host"); if (this.service === "s3" && !this.signQuery && !this.headers.has("X-Amz-Content-Sha256")) { this.headers.set("X-Amz-Content-Sha256", "UNSIGNED-PAYLOAD"); } const params = this.signQuery ? this.url.searchParams : this.headers; params.set("X-Amz-Date", this.datetime); if (this.sessionToken && !this.appendSessionToken) { params.set("X-Amz-Security-Token", this.sessionToken); } this.signableHeaders = ["host", ...this.headers.keys()].filter((header) => allHeaders || !UNSIGNABLE_HEADERS.has(header)).sort(); this.signedHeaders = this.signableHeaders.join(";"); this.canonicalHeaders = this.signableHeaders.map((header) => header + ":" + (header === "host" ? this.url.host : (this.headers.get(header) || "").replace(/\s+/g, " "))).join("\n"); this.credentialString = [this.datetime.slice(0, 8), this.region, this.service, "aws4_request"].join("/"); if (this.signQuery) { if (this.service === "s3" && !params.has("X-Amz-Expires")) { params.set("X-Amz-Expires", "86400"); } params.set("X-Amz-Algorithm", "AWS4-HMAC-SHA256"); params.set("X-Amz-Credential", this.accesskeyID + "/" + this.credentialString); params.set("X-Amz-SignedHeaders", this.signedHeaders); } if (this.service === "s3") { try { this.encodedPath = decodeURIComponent(this.url.pathname.replace(/\+/g, " ")); } catch (e) { this.encodedPath = this.url.pathname; } } else { this.encodedPath = this.url.pathname.replace(/\/+/g, "/"); } if (!singleEncode) { this.encodedPath = encodeURIComponent(this.encodedPath).replace(/%2F/g, "/"); } this.encodedPath = encodeRfc3986(this.encodedPath); const seenKeys = /* @__PURE__ */ new Set(); this.encodedSearch = [...this.url.searchParams].filter(([k]) => { if (!k) return false; if (this.service === "s3") { if (seenKeys.has(k)) return false; seenKeys.add(k); } return true; }).map((pair) => pair.map((p) => encodeRfc3986(encodeURIComponent(p)))).sort(([k1, v1], [k2, v2]) => k1 < k2 ? -1 : k1 > k2 ? 1 : v1 < v2 ? -1 : v1 > v2 ? 1 : 0).map((pair) => pair.join("=")).join("&"); } async sign() { if (this.signQuery) { this.url.searchParams.set("X-Amz-Signature", await this.signature()); if (this.sessionToken && this.appendSessionToken) { this.url.searchParams.set("X-Amz-Security-Token", this.sessionToken); } } else { this.headers.set("Authorization", await this.authHeader()); } return { method: this.method, url: this.url, headers: this.headers, body: this.body }; } async authHeader() { return [ "AWS4-HMAC-SHA256 Credential=" + this.accesskeyID + "/" + this.credentialString, "SignedHeaders=" + this.signedHeaders, "Signature=" + await this.signature() ].join(", "); } async signature() { const date = this.datetime.slice(0, 8); const cacheKey = [this.secretAccessKey, date, this.region, this.service].join(); let kCredentials = this.cache.get(cacheKey); if (!kCredentials) { const kDate = await hmac("AWS4" + this.secretAccessKey, date); const kRegion = await hmac(kDate, this.region); const kService = await hmac(kRegion, this.service); kCredentials = await hmac(kService, "aws4_request"); this.cache.set(cacheKey, kCredentials); } return buf2hex(await hmac(kCredentials, await this.stringToSign())); } async stringToSign() { return [ "AWS4-HMAC-SHA256", this.datetime, this.credentialString, buf2hex(await hash(await this.canonicalString())) ].join("\n"); } async canonicalString() { return [ this.method.toUpperCase(), this.encodedPath, this.encodedSearch, this.canonicalHeaders + "\n", this.signedHeaders, await this.hexBodyHash() ].join("\n"); } async hexBodyHash() { let hashHeader = this.headers.get("X-Amz-Content-Sha256") || (this.service === "s3" && this.signQuery ? "UNSIGNED-PAYLOAD" : null); if (hashHeader == null) { if (this.body && typeof this.body !== "string" && !("byteLength" in this.body)) { throw new Error("body must be a string, ArrayBuffer or ArrayBufferView, unless you include the X-Amz-Content-Sha256 header"); } hashHeader = buf2hex(await hash(this.body || "")); } return hashHeader; }};async function hmac(key, string) { const cryptoKey = await crypto.subtle.importKey( "raw", typeof key === "string" ? encoder.encode(key) : key, { name: "HMAC", hash: { name: "SHA-256" } }, false, ["sign"] ); return crypto.subtle.sign("HMAC", cryptoKey, encoder.encode(string));}async function hash(content) { return crypto.subtle.digest("SHA-256", typeof content === "string" ? encoder.encode(content) : content);}function buf2hex(buffer) { return Array.prototype.map.call(new Uint8Array(buffer), (x) => ("0" + x.toString(16)).slice(-2)).join("");}function encodeRfc3986(urlEncodedStr) { return urlEncodedStr.replace(/[!'()*]/g, (c) => "%" + c.charCodeAt(0).toString(16).toUpperCase());}function guessServiceRegion(url, headers) { const { hostname, pathname } = url; if (hostname.endsWith(".r2.cloudflarestorage.com")) { return ["s3", "auto"]; } if (hostname.endsWith(".backblazeb2.com")) { const match2 = hostname.match(/^(?:[^.]+\.)?s3\.([^.]+)\.backblazeb2\.com$/); return match2 != null ? ["s3", match2[1]] : ["", ""]; } const match = hostname.replace("dualstack.", "").match(/([^.]+)\.(?:([^.]*)\.)?amazonaws\.com(?:\.cn)?$/); let [service, region] = (match || ["", ""]).slice(1, 3); if (region === "us-gov") { region = "us-gov-west-1"; } else if (region === "s3" || region === "s3-accelerate") { region = "us-east-1"; service = "s3"; } else if (service === "iot") { if (hostname.startsWith("iot.")) { service = "execute-api"; } else if (hostname.startsWith("data.jobs.iot.")) { service = "iot-jobs-data"; } else { service = pathname === "/mqtt" ? "iotdevicegateway" : "iotdata"; } } else if (service === "autoscaling") { const targetPrefix = (headers.get("X-Amz-Target") || "").split(".")[0]; if (targetPrefix === "AnyScaleFrontendService") { service = "application-autoscaling"; } else if (targetPrefix === "AnyScaleScalingPlannerFrontendService") { service = "autoscaling-plans"; } } else if (region == null && service.startsWith("s3-")) { region = service.slice(3).replace(/^fips-|^external-1/, ""); service = "s3"; } else if (service.endsWith("-fips")) { service = service.slice(0, -5); } else if (region && /-\d$/.test(service) && !/-\d$/.test(region)) { [service, region] = [region, service]; } return [HOST_SERVICES[service] || service, region];}

// index.jsvar UNSIGNABLE_HEADERS2 = [ // These headers appear in the request, but are not passed upstream "x-forwarded-proto", "x-real-ip", // We can't include accept-encoding in the signature because Cloudflare // sets the incoming accept-encoding header to "gzip, br", then modifies // the outgoing request to set accept-encoding to "gzip". // Not cool, Cloudflare! "accept-encoding"];var HTTPS_PROTOCOL = "https:";var HTTPS_PORT = "443";var RANGE_RETRY_ATTEMPTS = 3;function filterHeaders(headers, env) { return new Headers(Array.from(headers.entries()).filter( (pair) => !UNSIGNABLE_HEADERS2.includes(pair[0]) && !pair[0].startsWith("cf-") && !("ALLOWED_HEADERS" in env && !env.ALLOWED_HEADERS.includes(pair[0])) ));}var my_proxy_default = { async fetch(request, env) { if (!["GET", "HEAD"].includes(request.method)) { return new Response(null, { status: 405, statusText: "Method Not Allowed" }); } const url = new URL(request.url); url.protocol = HTTPS_PROTOCOL; url.port = HTTPS_PORT; let path = url.pathname.replace(/^\//, ""); path = path.replace(/\/$/, ""); const pathSegments = path.split("/"); if (env.ALLOW_LIST_BUCKET !== "true") { if (env.BUCKET_NAME === "$path" && pathSegments.length < 2 || env.BUCKET_NAME !== "$path" && path.length === 0) { return new Response(null, { status: 404, statusText: "Not Found" }); } } switch (env.BUCKET_NAME) { case "$path": url.hostname = env.B2_ENDPOINT; break; case "$host": url.hostname = url.hostname.split(".")[0] + "." + env.B2_ENDPOINT; break; default: url.hostname = env.BUCKET_NAME + "." + env.B2_ENDPOINT; break; } const headers = filterHeaders(request.headers, env); const endpointRegex = /^s3\.([a-zA-Z0-9-]+)\.backblazeb2\.com$/; const [, aws_region] = env.B2_ENDPOINT.match(endpointRegex); const client = new AwsClient({ "accesskeyID": env.B2_APPLICATION_KEY_ID, "secretAccessKey": env.B2_APPLICATION_KEY, "service": "s3", "region": aws_region }); const signedRequest = await client.sign(url.toString(), { method: request.method, headers }); if (signedRequest.headers.has("range")) { let attempts = RANGE_RETRY_ATTEMPTS; let response; do { let controller = new AbortController(); response = await fetch(signedRequest.url, { method: signedRequest.method, headers: signedRequest.headers, signal: controller.signal }); if (response.headers.has("content-range")) { if (attempts < RANGE_RETRY_ATTEMPTS) { console.log(`Retry for ${signedRequest.url} succeeded - response has content-range header`); } break; } else if (response.ok) { attempts -= 1; console.error(`Range header in request for ${signedRequest.url} but no content-range header in response. Will retry ${attempts} more times`); if (attempts > 0) { controller.abort(); } } else { break; } } while (attempts > 0); if (attempts <= 0) { console.error(`Tried range request for ${signedRequest.url} ${RANGE_RETRY_ATTEMPTS} times, but no content-range in response.`); } return response; } return fetch(signedRequest); }};export { my_proxy_default as default};点击部署进行部署。

配置Worker

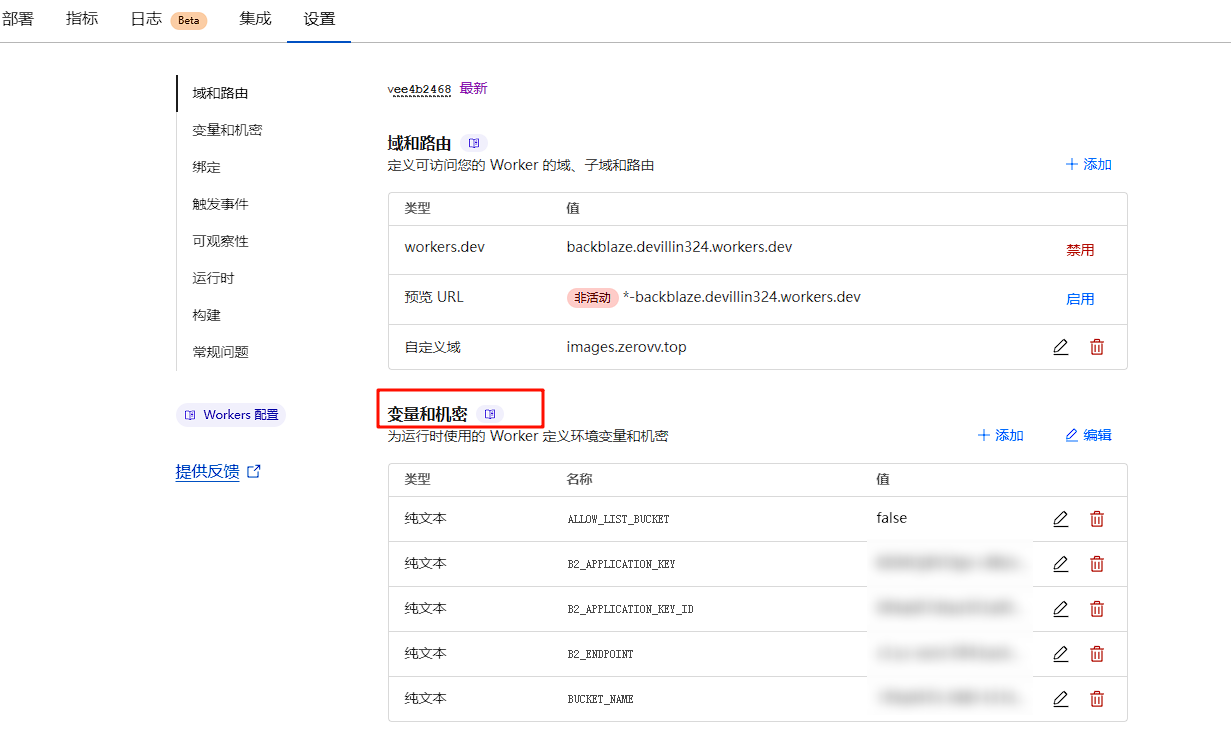

在创建的Worker页面,点击上方设置,在点击变量和机密

点击添加,添加5个变量,如下:

ALLOW_LIST_BUCKET = falseB2_APPLICATION_KEY = 创建的 application KeyB2_APPLICATION_KEY_ID = 创建的 keyIDB2_ENDPOINT = 第一步记录的 Endpoint 值(如 s3.us-west-004.backblazeb2.com)BUCKET_NAME = 桶名添加自定义域,在上方域和路由选择添加。

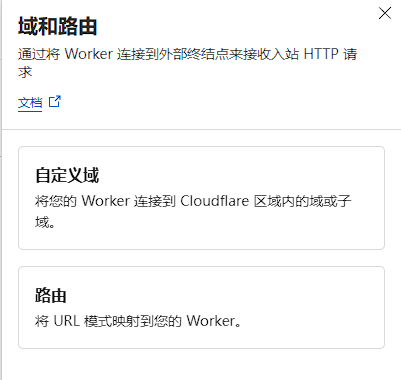

添加一个在自定义的域名地址用来访问图片(需要在Cloudflare中有记录的域名),添加成功Cloudflare会自动添加一条DNS记录指向Worker。

3.访问测试

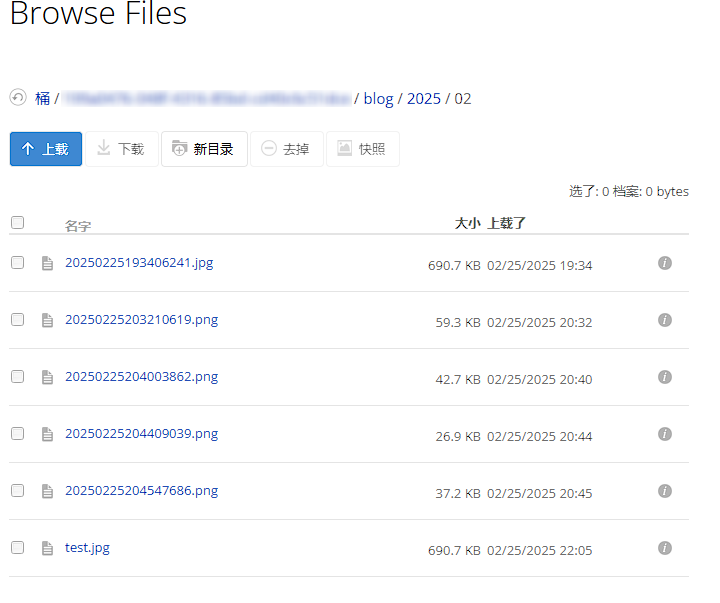

上传图片到私密桶

定位到新建的私密桶,点击 Upload/Download 按钮,然后继续点击 Upload按钮,上传一个图片。

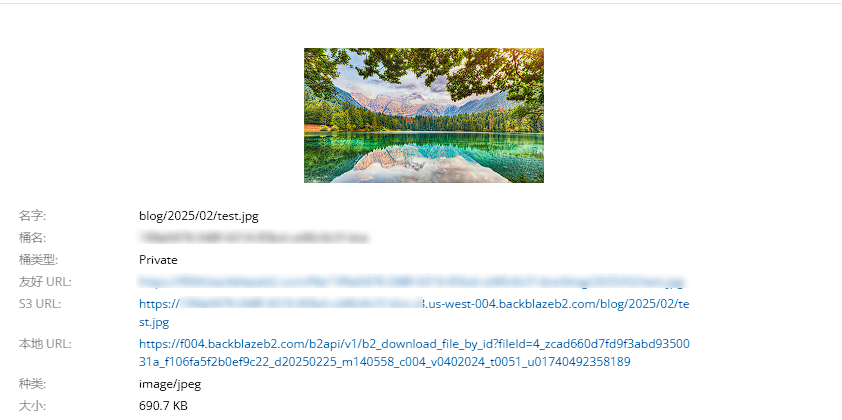

查看图片

访问测试

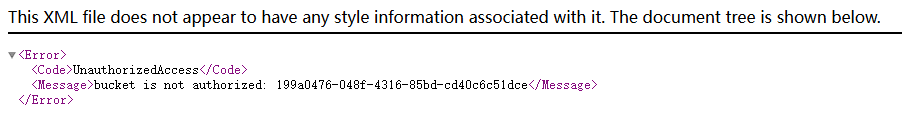

https://xxxx.s3.us-west-004.backblazeb2.com/blog/2025/02/test.jpg直接访问S3 URL是无法看见图片的。

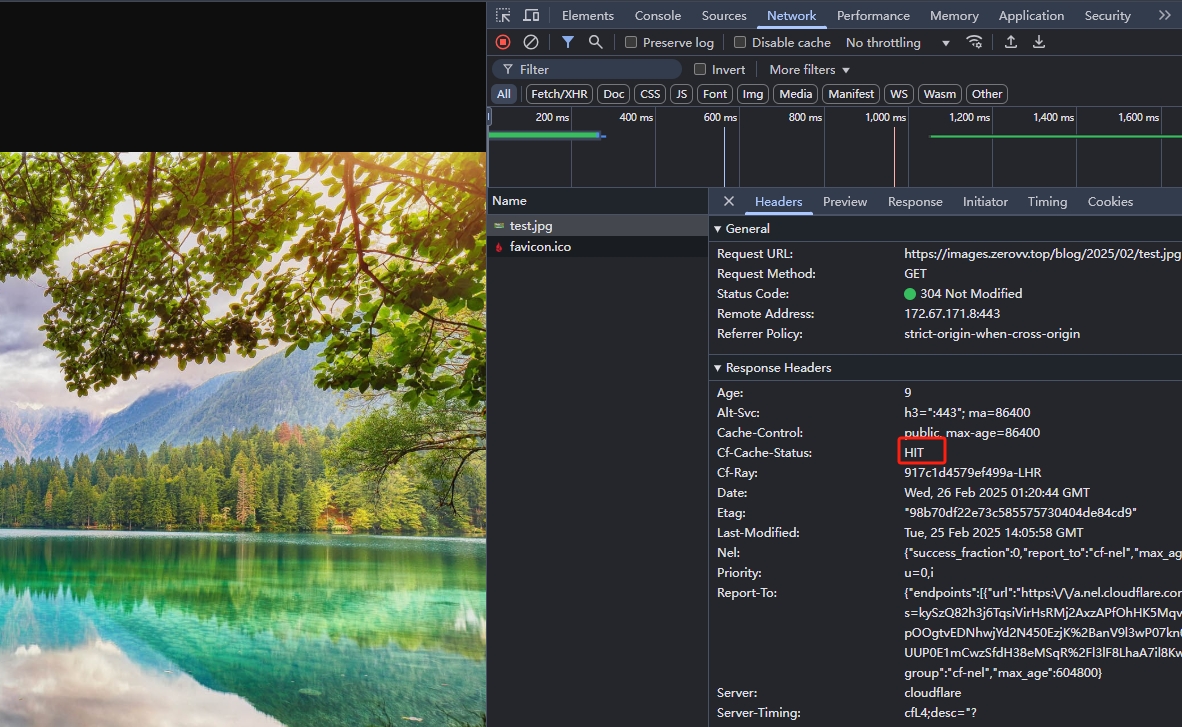

将S3 URL中的xxxx.s3.us-west-004.backblazeb2.com更换成Worker中自定义的域名,访问测试是可以查看图片的。

证明Cloudflare Worker 生效了,Cf-Cache-Status 的值为 HIT ,说明 Cloudflare 已经缓存成功。

4.配置PicGo

安装S3插件

在插件设置中,搜索S3,安装

配置S3

在PicGo设置 里,右边把 Amazon S3 启用。

配置项如下:

图床配置名: 随便起一个名字应用密钥ID: 保存的 keyID应用密钥: 保存的 application Key桶名: 创建的私密桶名文件路径: 可以根据自己喜好设置,我这里是(blog/{year}/{month}/{fullName})地区: EndPoint中的 us-west-004,根据自己节点填写自定义节点: 第一步记录的 EndPoint (我的是 https://s3.us-west-004.backblazeb2.com)代理: 留空ACL访问控制列表: private自定义输出URL模板: https://xxx/blog/{year}/{month}/{fileName}xxx为Worker中自定义的域名其余默认至此完成了Cloudflare+B2搭建图床。